What is KNN:

Supervised learning algorithm for classification.

Some assumptions:

- Real world data will not be this nice

- Assuming that the features that we extracted to represent data are good, we can assume that there will be some form of clustering for each class.

More formally,

Given and , we can distribute X such that:

and

Meaning: All points must be clustered and no point can be clustered to more than one class

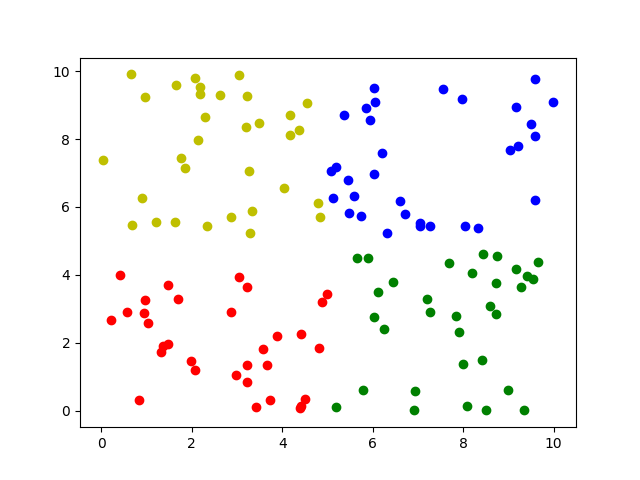

Visualize the data

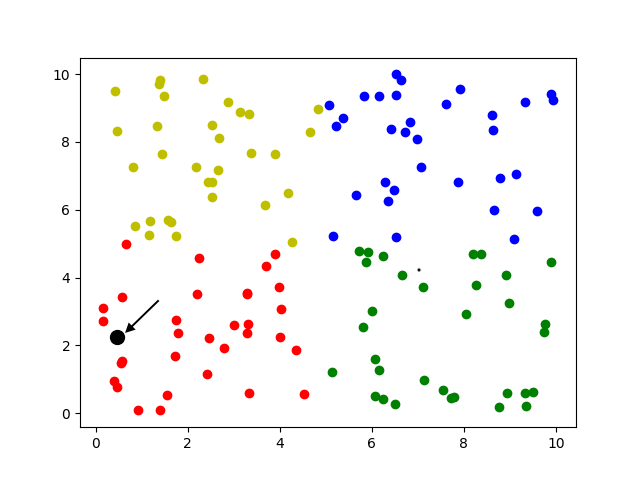

Task

Image you are given data D = ( , ) where and , Can you find the value of y for a new arbitrary test point (in black)

Training (sort of)

Intuitively:

- At every new data point, we ask “Which are the closest k points to this”

- Then, we predict the label by looking at those k things and seeing which y shows up the most

- If everything is tied, just take the closest one

Given a new test point , we can predict the label ( y_t ) by:

- Compute the Euclidean distance between ( x_t ) and each ( x_i ) in the D:

- Identify the k nearest neighbors by selecting the k smallest distances: where correspond to the indices of the nearest neighbors

- Predict the label by majority voting among the labels of the ( k ) nearest neighbors: where is the indicator function:

Note for ties: If there is a tie among labels, select the label with the closest distance to Formally, let be the set of labels tied for the majority vote. Then:

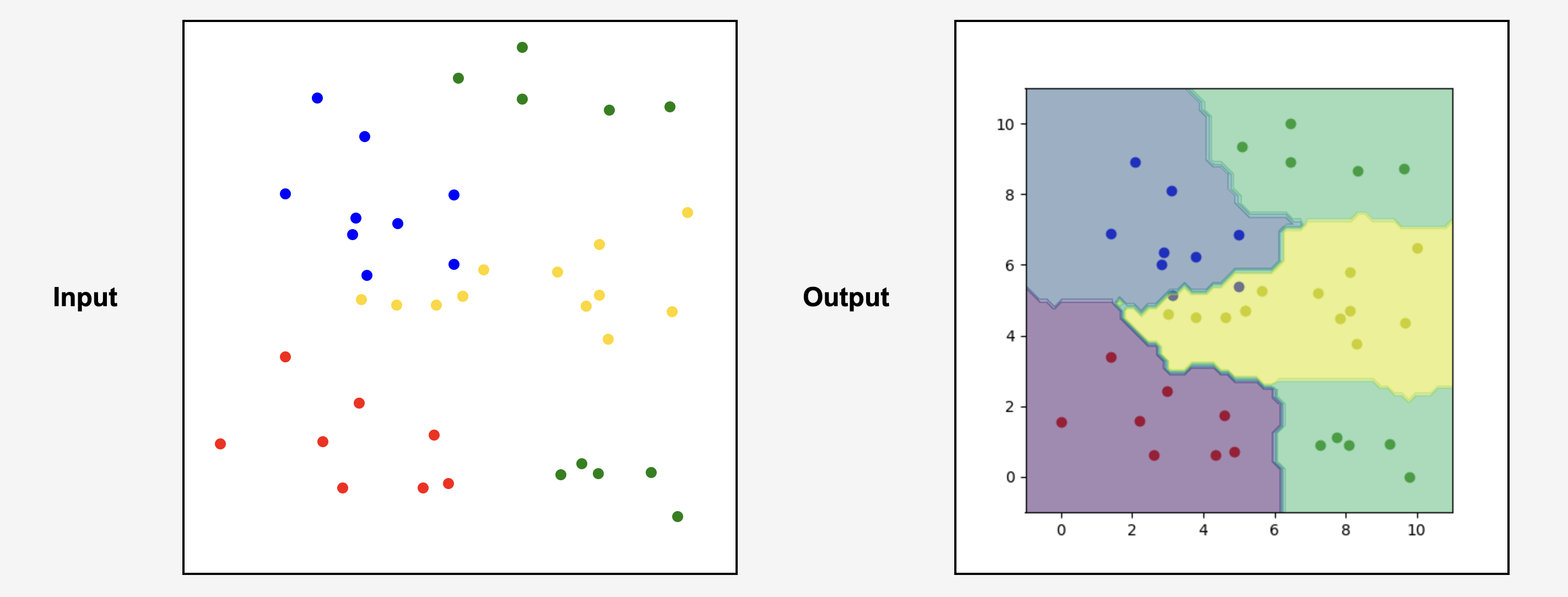

Example here: I created a meshgrid and predicted every point on it. The graph contours should what label would be predicted if the data point existed at (x, y)

I was playing around with different looking inputs and trying to see the boundaries. It was fun. I deployed it here if you want to do the same: https://knn-implementation.onrender.com/ Pls don’t abuse. This runs on a free tier, and is very slow. If it does not load, wait for a minute till it spins up again lol